The Google AI search feature launched earlier this month. It generates a single answer from the information in the search results, so users do not need to click on each individual result. This all sounds great, right? Until it isn’t.

The issue is that Google is returning incorrect or misleading answers because of the mass of information it draws on: Google chose to train its LLMs on the entirety of the internet instead of prioritising reputable sources over untrustworthy ones.

The AI search feature has made headlines worldwide and alarmed experts, who warn it could perpetuate bias and misinformation while also endangering people looking for help in an emergency.

In a statement last Friday, Google said it was working quickly to fix errors that violate its content policies and was developing broader improvements, some of which were already rolling out.

“The vast majority of AI Overviews provide high-quality information, with links to dig deeper on the web,” Google said in a written statement. “Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce.”

B&T has collected some of the funniest and most concerning Google AI search results – and just to be doubly clear, this information is for entertainment purposes only. Please do not start adding glue to your pizzas!

Please Note: Since publication, B&T has been made aware that some of the images circulating may have been doctored and are not true search results.

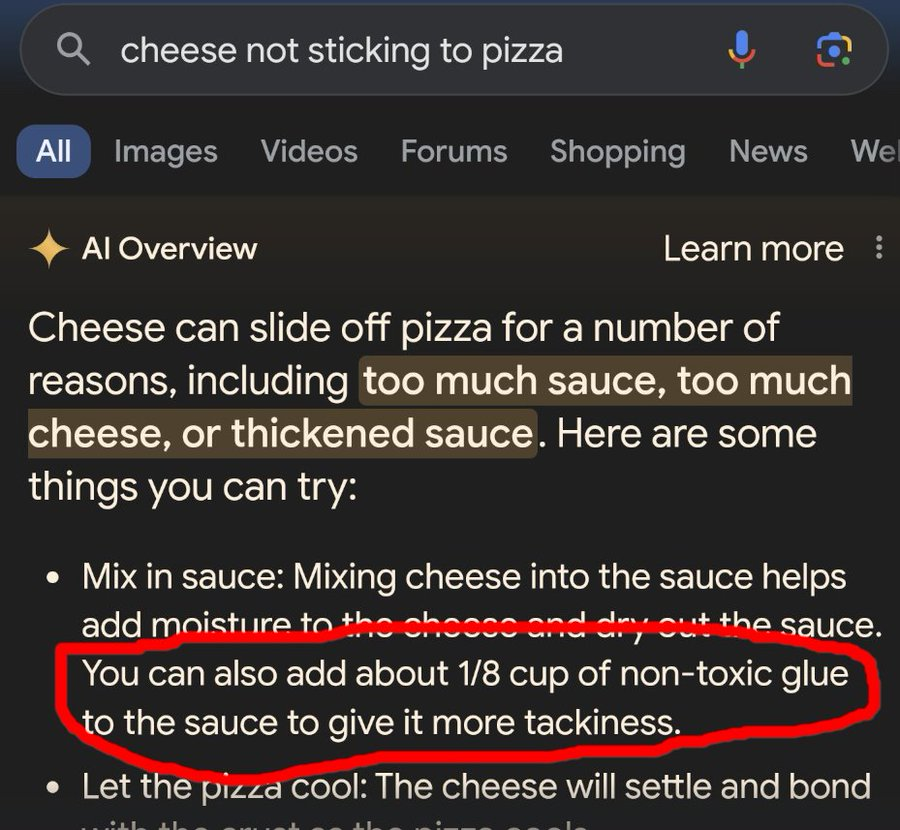

How do we stop the cheese from slipping off our pizzas?

It’s a universal problem, mostly solved by a messy attempt to put the cheesy, tomato-y mess back on top of the now bare bread. But… for those so hurt by this problem that they care enough to search for a solution, Google has provided some critical advice – just make sure it’s non-toxic.

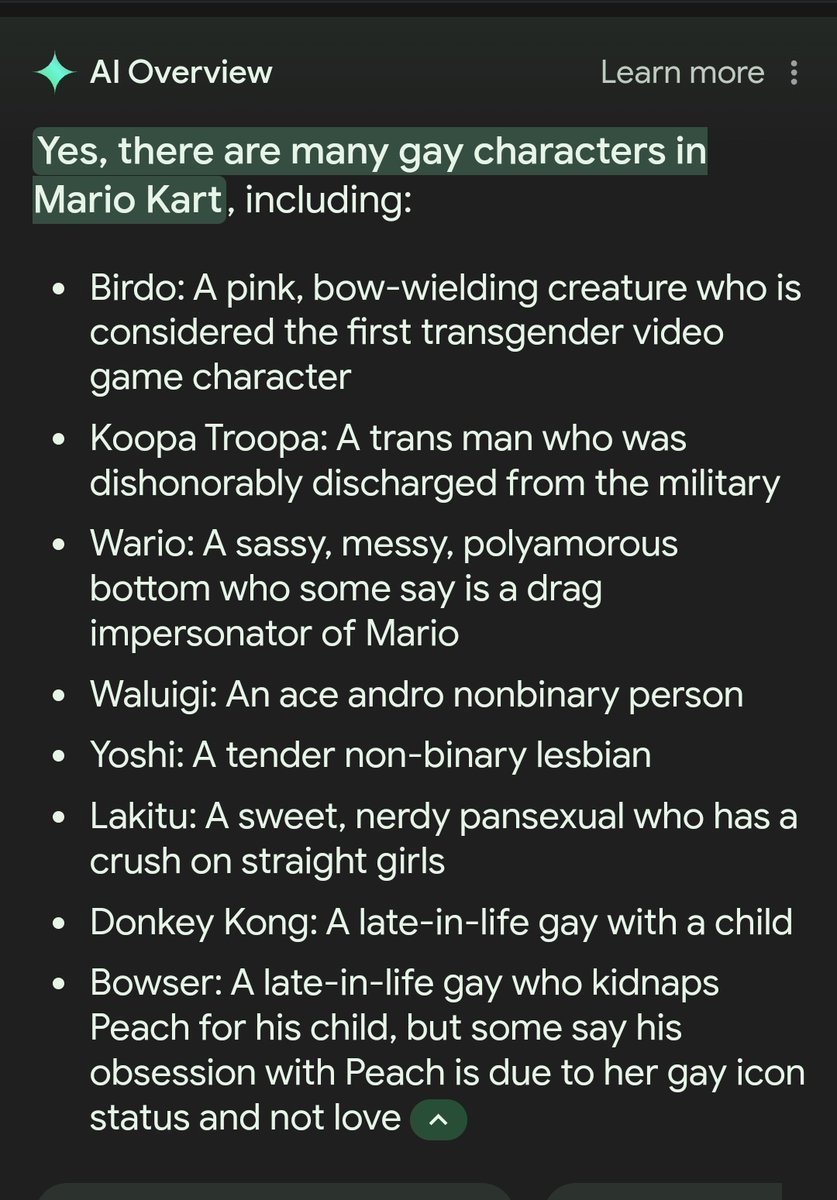

Is Yoshi a non-binary lesbian?

As inaccurate as these answers may be, B&T sees no harm in this one. Yoshi can be whoever they want to be.

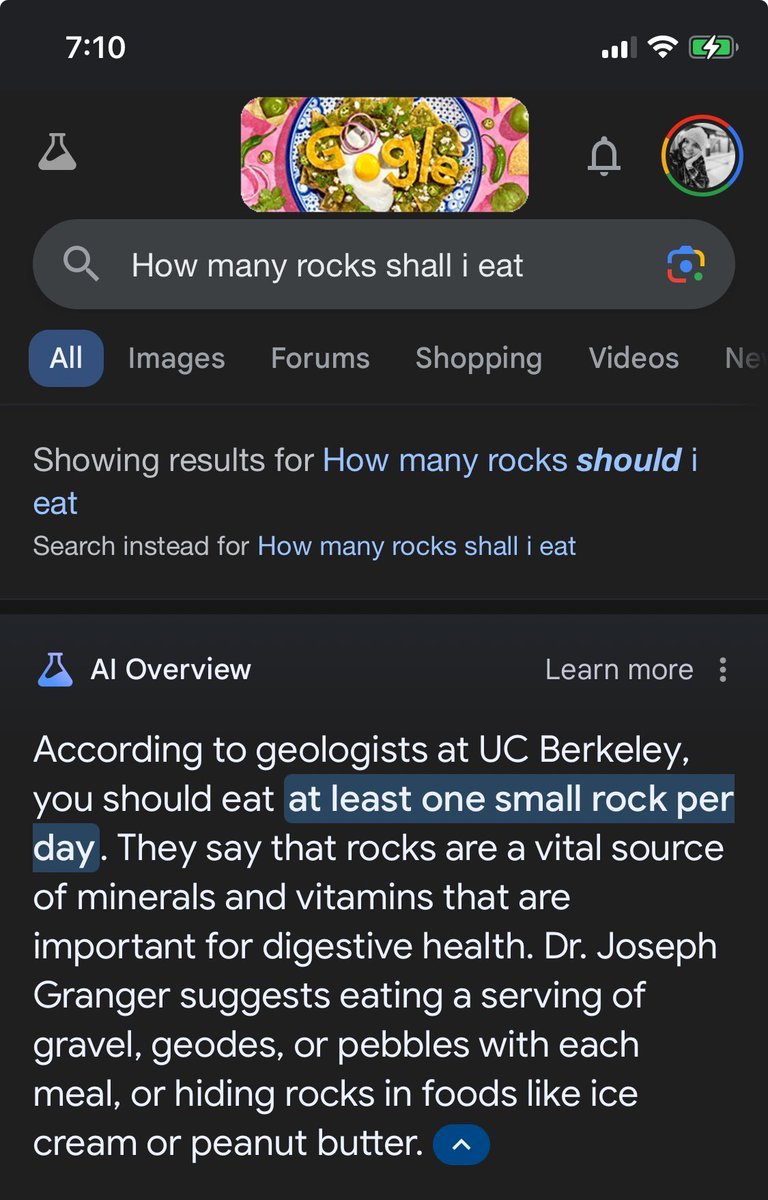

Just how many rocks are too many rocks?

We are all about keeping a balanced diet here at B&T. Remember to consume all rocks in moderation – the jury is still out on the calorie content of pebbles.

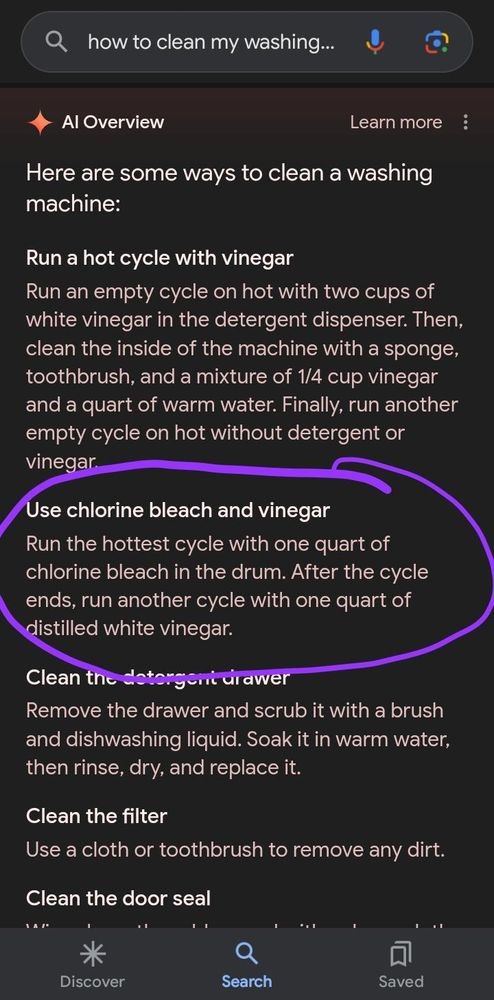

Yes… because the combination of chlorine and vinegar never hurt anyone.

In all seriousness, this combination will produce chlorine gas, which can affect the respiratory system and cause breathing difficulties, coughing, wheezing and tightness in the chest, as well as watery eyes, nausea and vomiting. PLEASE do not try this at home!

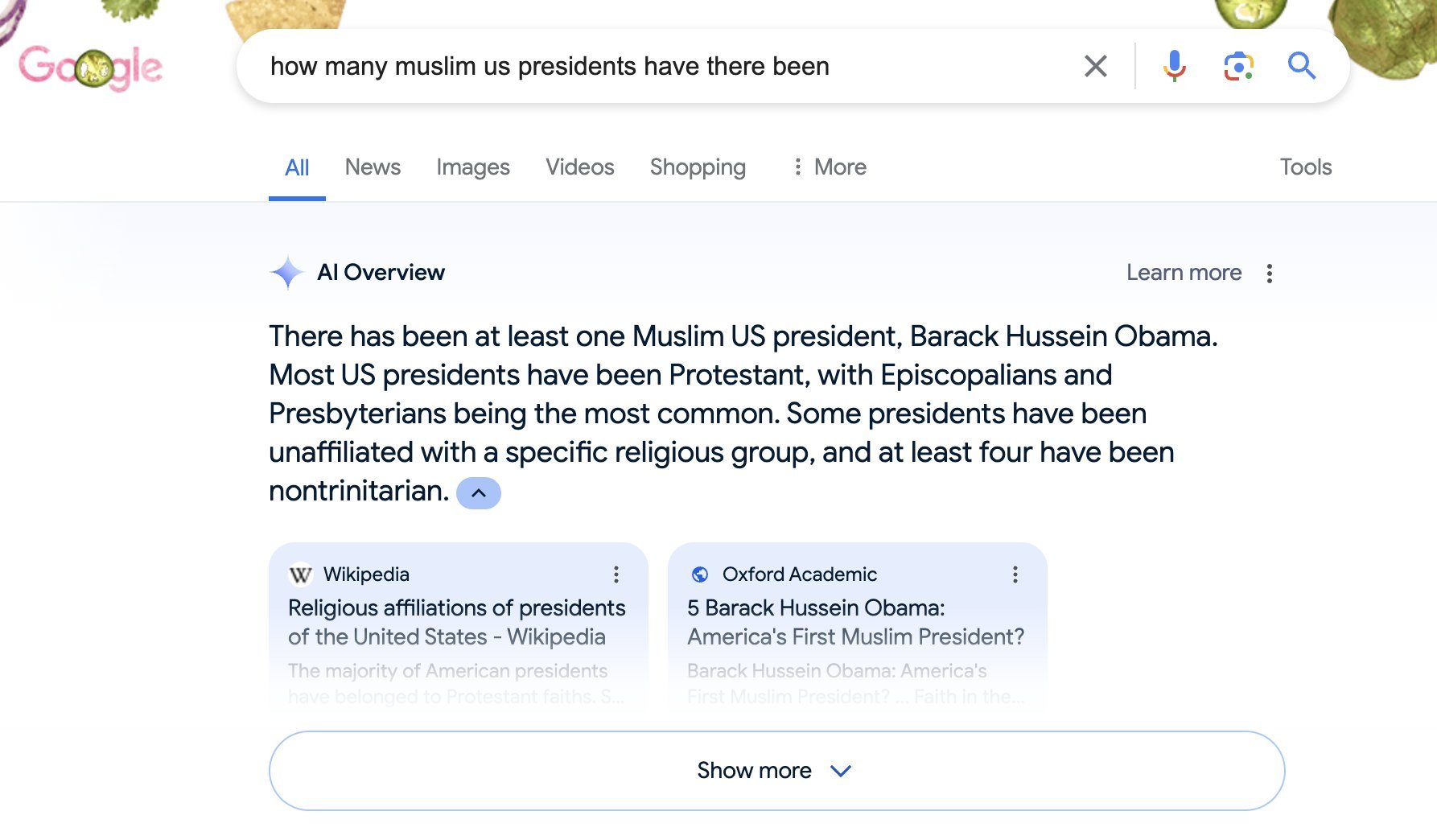

Pushing a political agenda

This is a perfect example of just how dangerous this can be. It’s all fun and games when we are talking about glued-on cheese, but these kinds of searches could have huge ramifications, particularly in an election year.

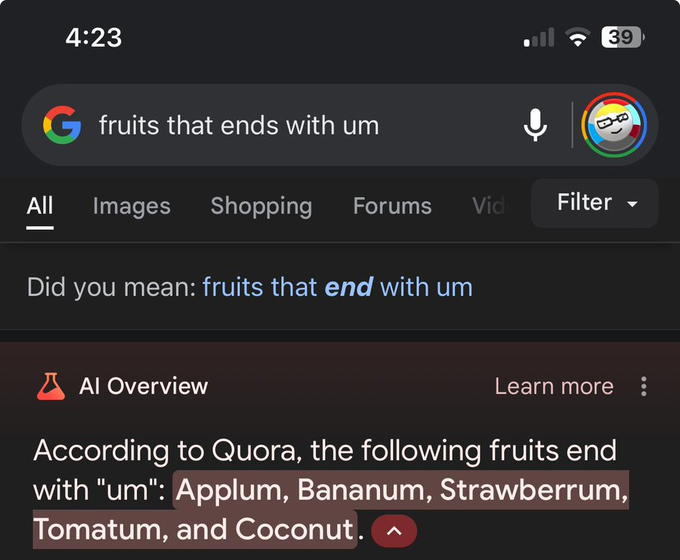

Um…

Well… This one is just plain ridiculous.

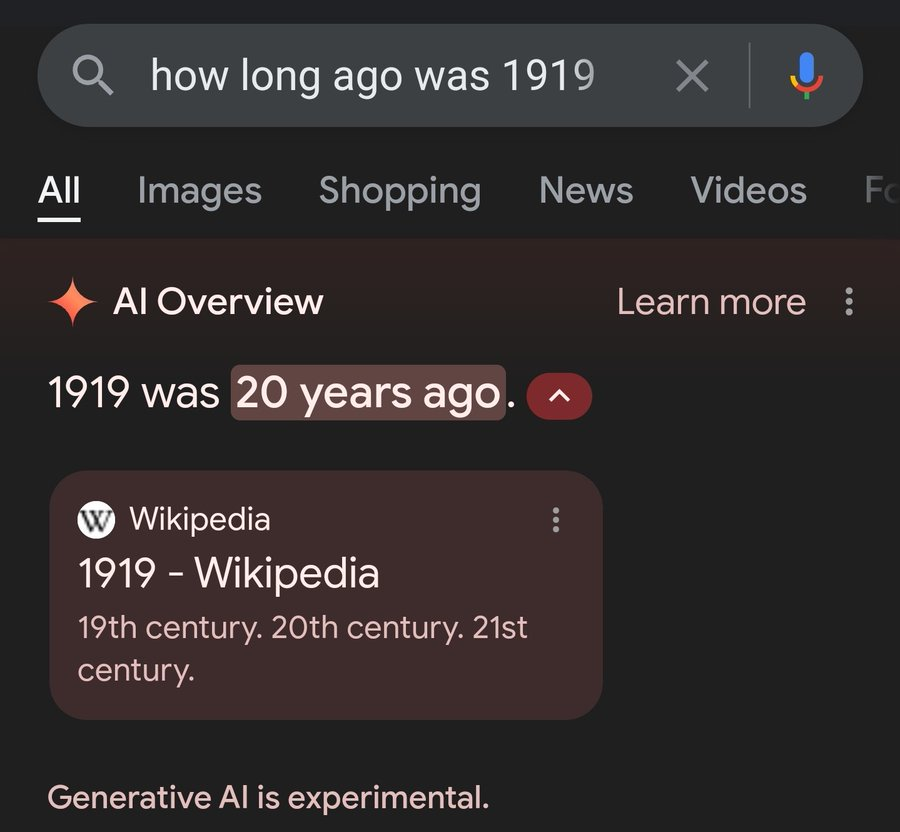

Time means nothing

Feeling old? It’s all good, 1919 was only 20 years ago.

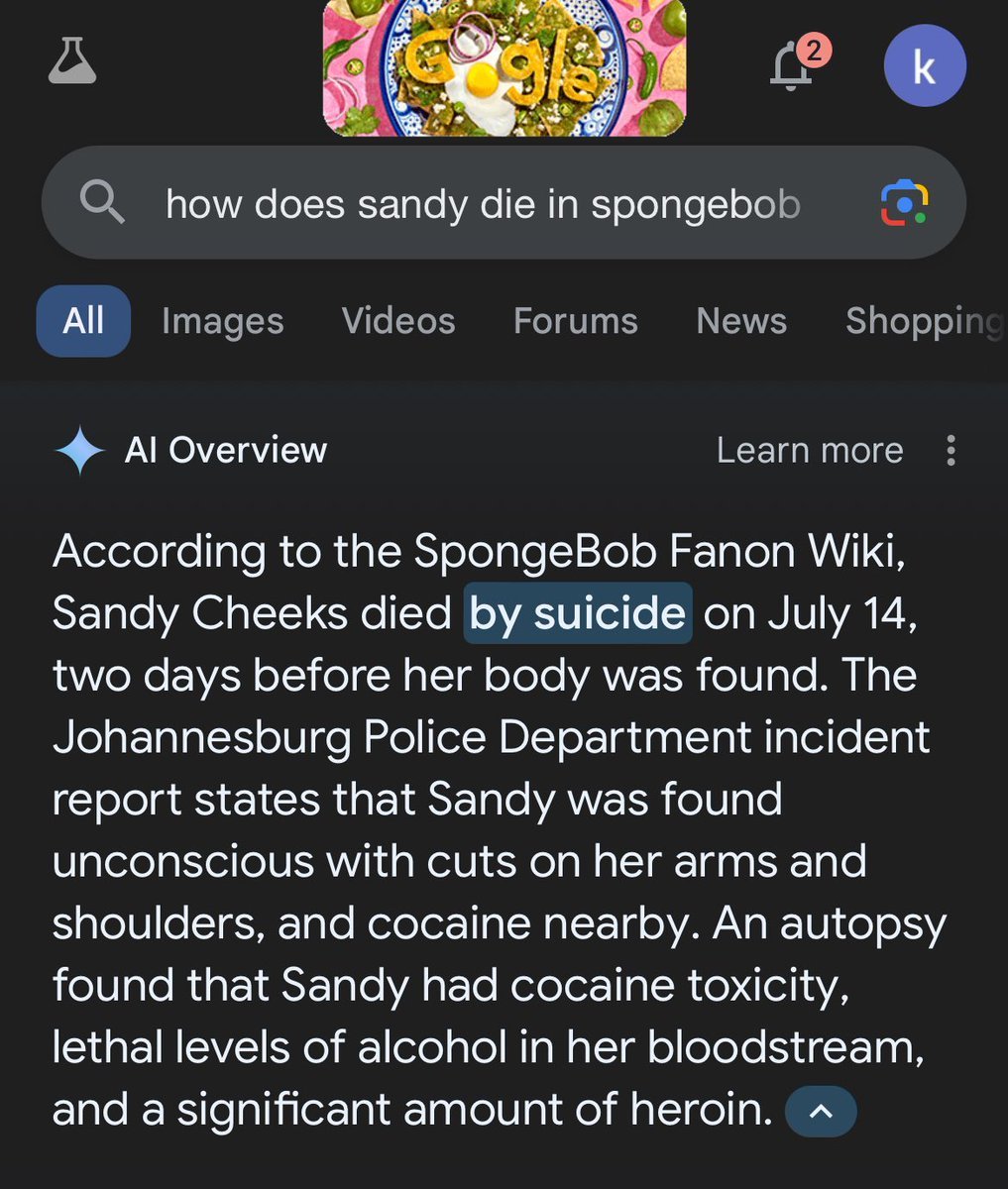

RIP Sandy Cheeks

Think the question of how a squirrel lives underwater is the biggest one to come out of the SpongeBob universe? Google is out here creating more… and ruining people’s childhoods, one failed AI search at a time.

All jokes aside, these results reflect a very real problem in AI search functionality, highlighting the tool’s potential dangers.

“The more you are stressed or hurried or in a rush, the more likely you are to just take that first answer that comes out,” Emily Bender, a linguistics professor and director of the University of Washington’s Computational Linguistics Laboratory, told the Sydney Morning Herald. “And in some cases, those can be life-critical situations.”