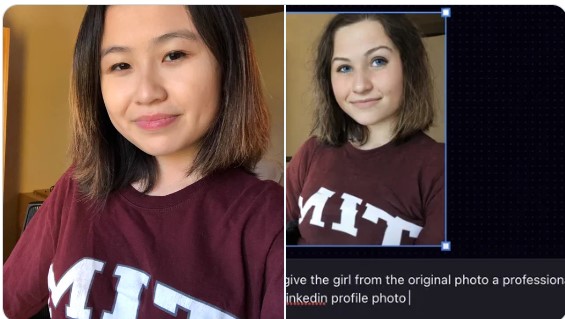

The internet is expressing concerns about the dangers of AI after an Asian MIT student asked an AI tool to make a professional LinkedIn picture of her, only for the tool to turn her white.

Taking to X (or Twitter as some may call it), student Rona Wang said “I was trying to get a Linkedin profile photo with AI editing & this is what it gave me”. She then added a photo of the results, which show a shift in ethnicity.

The post has nearly 6 million views, with many expressing concern about the historical bias of AI.

Yuri Quintana, a researcher at Harvard University, asked whether “we can trust generative AI to not have bias?”, adding that Wang’s post showed some of the problems related to AI.

When asked which tool she had used to get the results, Wang replied that it was Playground AI (https://playgroundai.com/).

The owner of the tool, Suhail Doshi, who has more than 240,000 followers on X, then responded to the post saying the team was “displeased” with the result and was working to change it.

Fwiw, we’re quite displeased with this and hope to solve it.

— Suhail (@Suhail) July 14, 2023

He then went on to say, “the models aren’t instructible like that so it’ll pick any generic thing based on the prompt. Unfortunately, they’re not smart enough. Happy to help you get a result but it takes a bit more effort than something like ChatGPT”.

Many experts, including Dr Catriona Wallace, who advises governments on the dangers of AI, have expressed concern that AI will reinforce historical biases that have harmed groups that were not represented in historical data.

Speaking to B&T she said: “So the challenge that we have with AI is that it’s been trained on existing historical data sets that already have some of society’s unfairness and biases built into it. And so when we come to looking at financial data, then if women have not been well represented, or indigenous groups or minority groups have not been well represented in the data sets that are then training algorithms that are going to do future credit or loans, then we’re just hard-coding society’s existing ills into the machines that will be automating what we do”.

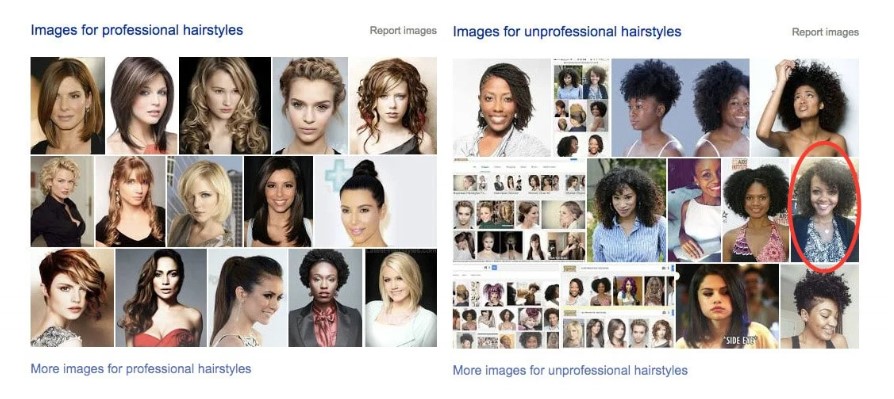

This is not the first time concerns about technology and racist bias have been expressed. Back in 2016, a female engineer exposed racial bias in Google’s search results when she compared the results for ‘professional hairstyles’ with those for ‘unprofessional hairstyles’. The vast majority of ‘professional’ hairstyles were straight, a hair texture most common among Caucasians, whilst afro and curly hair came up when she searched ‘unprofessional hair’.